Inspired by Gus Pelogia's awesome post on using Screaming Frog and ChatGPT to map related pages at scale, I wanted to try re-engineering the script to instead find new internal link opportunities for specific orphaned or under-linked pages.

Mike King wrote all about the SEO use cases for vector embeddings which is a great read to find out more about the concept of vector embeddings and their importance in SEO and content analysis. It’s a great argument that vector embeddings, which represent words or concepts as numerical vectors, offer significant advantages over traditional keyword-based methods.

In this script, we're using vector embeddings and cosine similarity to identify relevant internal linking opportunities for orphaned or under linked pages. Here's how it works:

Extracts Embeddings: The content of each page is converted into a vector embedding (a list of numbers), which represents the semantic meaning of the page.

Finds Related Pages: By calculating the cosine similarity between the embeddings of different pages, the code finds the pages that are most contextually similar to the orphaned or under linked pages.

Input List of URLs: These URLs are the pages you’ve identified as orphaned or under linked.

Checks for Existing Links: The script checks if a source page already links to the destination page. If no link exists and the pages are relevant, it suggests adding a new internal link (of course this is only needed for the under linked pages, but is good practice to ensure we're only making new linking suggestions).

Suggest New Links: If no link exists, and the related page is relevant, it suggests adding a new internal link.

Prioritises Opportunities: The script uses a custom relevance threshold to only suggest links between highly related pages, ensuring that the new internal links improve SEO and user experience by linking to relevant content.

What are Vector Embeddings?

Vector embeddings are numerical representations of words, phrases, or even entire pages that capture their semantic meaning. In the context of SEO and content analysis, embeddings allow us to represent the content of a webpage as a high-dimensional vector (a list of numbers) that reflects the topics, themes, and context of the page.

Instead of comparing pages by keywords, embeddings allow for more nuanced content analysis by measuring how closely two pages are related in terms of meaning, even if they use different words. This is especially useful for identifying content that’s semantically similar.

What is Cosine Similarity?

Cosine similarity is a method used to measure the similarity between two vectors (in this case, two pages represented by their embeddings). It calculates the cosine of the angle between the two vectors, which gives a value between 0 and 1:

A score of 1 means the vectors are pointing in the same direction, indicating that the pages are very similar.

A score of 0 means the vectors are completely different, indicating that the pages are unrelated.

Step 1: Run a crawl with extractions

The main set-up is the same as Gus explains, using Screaming Frog’s built in JavaScript extraction and the OpenAI API to extract embeddings from page content.

First ensure JavaScript rendering is enabled by going to Configuration > Spider > Rendering.

Then Configuration > Custom > Custom JavaScript > Add from Library > (ChatGPT) Extract embeddings from page content to set up your custom extraction for the embeddings.

Once that has been added, you just edit the JavaScript and add your OpenAI API key. You can create/find your API key here and keep an eye on it's usage here.

You will need OpenAI API credits in order to run the extractions, these are fairly inexpensive and you can typically run through thousands of URLs for less than $5. However, you can set up limits to ensure that you don't eat through credits.

Because we're using an API, there may be cases where the content is too long or the request times out. In this case the returned data will be an error message. Additionally, we will only see embeddings for textual content, so any images or scripts crawled will return an empty response - we will handle any of these cases within the script.

When running the crawl, it’s important to note that some of the pages that we’re looking for are going to be orphan pages so we need to ensure those are included in the crawl by including multiple sources such as your sitemap and GSC.

Once the crawl has finished you will see the URL and the extracted embeddings for each. The embeddings are essentially a list of long numbers.

In the context of using embeddings generated from a Screaming Frog crawl, the long numbers you see represent the high-dimensional vectors that encode specific characteristics of each webpage.

Exporting this report will give us the start of the data needed. You are welcome to review this file in excel or Google sheets, but we will do all of the data cleaning within Python, so you can just upload it straight to the Google Colab notebook (this is especially useful if you have a large dataset that may crash G-sheets or excel).

Step 2: Exporting all internal links

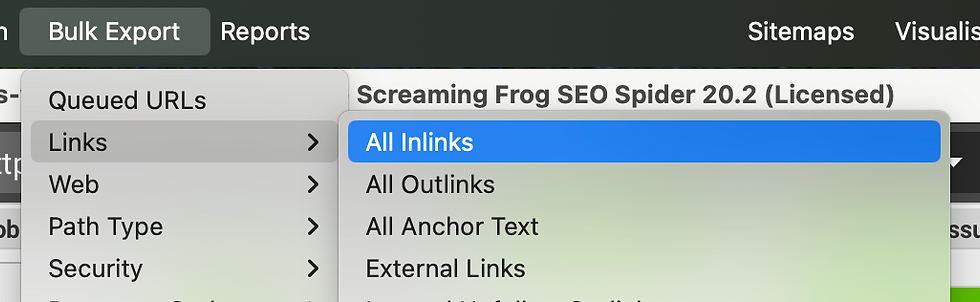

As we will be checking against current links across the site, we also need to export the full internal links report from Screaming Frog. This is easy to do by going to Bulk Export > Links > All Inlinks.

Step 3: The Script for finding relevant internal link opportunities

If you're interested in exploring what each part of the code does, I've detailed it below but if you're just interested in the script here are the changes that I made:

Data cleaning in Python (Instead of Excel/Google Sheets):

This includes:

Removing NaN values.

Filtering out any errors in the embeddings.

Excluding parameterised URLs (those containing ?) to focus on canonical URLs.

Filtering to only include Hyperlinks within the content

Renaming columns and removing any irrelevant columns

By doing this in Python, the process is more automated and scalable, eliminating the need for manual cleanup in tools like Excel or Google Sheets.

Checking against current internal links:

The script checks whether a Source page already links to a Destination page before suggesting new linking opportunities. This prevents duplicate links from being suggested.

Finding pages to add links to (for orphaned or under linked pages):

The main feature of the updated script is the ability to identify internal linking opportunities for specific orphaned or under linked pages.

A custom relevance threshold

The script also includes a relevance threshold of 0.7 to ensure that only the most related pages are considered for linking.

Relevance score:

The script also includes the relevance (cosine similarity) score for each identified related page. This helps prioritise linking opportunities based on how closely related the content is, ensuring more strategic and relevant internal links are added.

Datasets Used in the Script

The script works with two main datasets to find the best internal linking opportunities. We upload these at the beginning and clean the data before getting to the main part of the script.

1. List of Pages with Embeddings

We upload a list of pages that have embeddings generated from their content. These embeddings are used to calculate the cosine similarity between pages, which helps identify relevant internal linking opportunities.

The embeddings capture the semantic content of each page, allowing the script to find pages that are contextually related.

2. List of Internal links from a Crawl

We also upload the internal links from a site crawl, which provides information about which pages are currently linking to each other. This data allows the script to check if a link already exists between pages before suggesting a new one.

The crawl data includes the Source and Destination pages, along with additional metadata such as link type and position.

Why a custom relevance threshold of 0.7?

The use of a custom threshold allows us to fine-tune the relevance level needed to suggest a link, helping to avoid irrelevant or low-quality link suggestions.

0.7 is often a good default threshold for cosine similarity, as it indicates a reasonably high level of similarity between the two embeddings. Anything below this might suggest that the pages are less relevant to each other.

However, this is customisable, so you are welcome to update this where you see it in the code as

min_relevance=0.7What the relevance score represents:

In the case of our code, Cosine Similarity indicates the degree of similarity between the content of two pages.

The range of values sits between 0 and 1:

1 means that the two vectors are exactly the same, implying the content of the two pages is identical.

0 means that the vectors are completely different, meaning the content of the pages is unrelated.

Scores closer to 1 indicate that the pages are more similar in content, while scores closer to 0 indicate less similarity.

How is the relevance score calculated?

The relevance score looks at how similar the "topics" or "themes" of the pages are based on their numerical embeddings, regardless of how long or short the content is.

Why the relevance score matters:

High Relevance Scores (closer to 1) mean that the pages are strongly related in terms of content, making them good candidates for internal linking.

Low Relevance Scores (closer to 0) suggest that the pages are less related. Linking between unrelated pages could confuse users and search engines, so these are not good candidates for internal linking.

For example, if the cosine similarity (relevance score) between two pages is 0.9, this indicates that the pages are very similar, potentially discussing related topics. If the score is 0.3, the pages likely cover different topics, and linking them might not be valuable.

Adding pages to find related links for

Finally, the script has two ways to input the pages for which you want to find relevant internal links:

1. Add a List of URLs

You can manually provide a list of one or more URLs directly into the script to find linking opportunities. This is useful if you’re working with a smaller set of pages and want quick results.

urls = ['https://www.saveourscruff.org/available-dogs', 'https://www.saveourscruff.org/ways-to-get-involved']

best_link_opportunities_df = find_best_link_opportunities(urls, df, outlinks_df, min_relevance=0.7, top_n=10)

best_link_opportunities_df2. Upload a CSV File

If you’re working with a larger set of pages, you can upload a CSV file containing the list of URLs. This makes it easy to manage and process bulk pages without manually entering each one. Just ensure that the column heading is 'URL'.

print("Please upload the file containing the list of URLs")

uploaded = files.upload()

pages_df = pd.read_csv(list(uploaded.keys())[0])

urls = pages_df['URL'].tolist()

best_link_opportunities_df = find_best_link_opportunities(urls, df, outlinks_df, min_relevance=0.7, top_n=10)

best_link_opportunities_df

The output

Your output should look something like this:

URL: This is the orphaned or under linked page that we have defined or uploaded. These are the pages that you want to add internal links to.

Related URL: This is the potential source page where you could add an internal link to the orphaned or under linked page. These are pages that are contextually related and are good candidates to link from.

Relevance Score: This is the cosine similarity score that represents how closely related the Related URL is to the orphaned/under linked page. As we know, the higher the score (closer to 1), the more relevant the pages are to each other. You can use this score to prioritise which pages to link from, starting with those that have higher relevance scores.

You can export this output to a CSV file before reviewing it with a human eye and then following your next steps to get those links added. Remember that the suggested links should also make sense from a user experience perspective so we're not just linking based on relevance but also considering user flow and content hierarchy.

Ready to review the script? Here it is. I've added written steps for each part to make it easier to run.

(something you may notice is that I like to add comments to my code, this is to help understand what each thing does - it makes it easier for me to remember but is also helpful when sharing with others - I hope you find this helpful and not too annoying!)

Any feedback, please feel free to let me know (nicely) and if you use it I'd love to hear how you get on!

As I stated earlier, I have broken down the code and what each step does below if you're interested in digging into it more or re-engineering it to make it even better 😀

A detailed breakdown of the script

Converting Embeddings from Strings to Numpy Arrays

df['Embeddings'] = df['Embeddings'].apply(lambda x: np.array([float(i) for i in x.replace('[','').replace(']','').split(',')]))What This Does:

The embeddings in the DataFrame are stored as strings, for example, something like "[0.1, 0.2, 0.3]", However, for cosine similarity calculations, we need to convert these into numerical arrays.

This line of code converts each string of numbers into a NumPy array of floating-point numbers (decimal numbers), which will be used later for similarity calculations between pages.

Key Parts of the Code:

apply(lambda x: ...): This applies a function (defined using a lambda function) to each value in the Embeddings column.

replace('[','').replace(']',''): Removes the square brackets from the string to make it easier to split the elements.

split(','): Splits the string into individual elements separated by commas.

[float(i) for i in ...]: Converts each split string element into a floating-point number.

np.array(...): Converts the list of numbers into a NumPy array, which is an efficient format for mathematical operations like cosine similarity.

Function to Find Top N Related Pages Using Cosine Similarity

def find_related_pages(df, top_n=10, threshold=0.7):

related_pages = {}

embeddings = np.stack(df['Embeddings'].values)

cosine_similarities = cosine_similarity(embeddings)What This Does:

This function identifies the top N related pages for each URL in the dataset based on cosine similarity. It compares the embeddings (numerical representations of the page content) and calculates how similar they are, returning pages that are the most contextually related.

related_pages = {}: Initializes an empty dictionary to store the related pages for each URL.

embeddings = np.stack(df['Embeddings'].values): Stacks all the embeddings from the DataFrame into a 2D NumPy array, where each row represents a page's embedding.

cosine_similarities = cosine_similarity(embeddings): Calculates the cosine similarity between all pairs of pages using their embeddings. The result is a similarity matrix where each value indicates how related two pages are.

Filtering Pages by Similarity Threshold

for idx, url in enumerate(df['URL']):

similar_indices = np.where(cosine_similarities[idx] > threshold)[0] similar_indices = similar_indices[similar_indices != idx] What This Does:

This loop goes through each URL in the dataset and filters out any pages that do not meet the cosine similarity threshold (set to 0.7 by default). It also makes sure that the page doesn't compare itself by excluding it from the list of related pages.

for idx, url in enumerate(df['URL']): Loops over each URL in the DataFrame. idx is the index, and url is the actual URL string.

np.where(cosine_similarities[idx] > threshold)[0]: Finds the indices of all pages that have a cosine similarity score above the threshold (0.7) for the current page.

similar_indices[similar_indices != idx]: Ensures the current page is excluded from its own list of related pages by filtering out its own index.

Sorting and Getting Top N Related Pages

if len(similar_indices) > 0:

sorted_indices = similar_indices[cosine_similarities[idx]

[similar_indices].argsort()][::-1][:top_n]

related_urls = df.iloc[sorted_indices]['URL'].values.tolist()

related_scores = cosine_similarities[idx]

[sorted_indices].tolist() related_pages[url] =

list(zip(related_urls, related_scores)) What This Does:

If related pages are found, this code sorts them by their cosine similarity score in descending order and selects the top N most similar pages for each URL. It then stores these related pages along with their similarity scores.

if len(similar_indices) > 0: Only proceed if there are related pages that pass the threshold.

sorted_indices = similar_indices[cosine_similarities[idx][similar_indices].argsort()][::-1][:top_n]: Sorts the similar pages by their similarity scores in descending order and selects the top N (default: 10).

related_urls = df.iloc[sorted_indices]['URL'].values.tolist(): Retrieves the URLs of the top N related pages.

related_scores = cosine_similarities[idx][sorted_indices].tolist(): Retrieves the corresponding similarity scores for these pages.

related_pages[url] = list(zip(related_urls, related_scores)): Stores the top related pages and their scores in the dictionary under the current URL.

Return the Related Pages

return related_pagesWhat This Does:

The function returns a dictionary where each URL is a key, and the value is a list of its most related pages along with their similarity scores.

Find Best Link Opportunities

def find_best_link_opportunities(urls, df, outlinks_df, min_relevance=0.7, top_n=10):

all_link_opportunities = []What This Does:

This function identifies internal link opportunities for a list of URLs by finding related pages based on cosine similarity. It checks if the related pages already link to the URL and suggests new links for the pages that don’t have links.

Key Parts:

urls: The list of URLs for which internal linking opportunities need to be found.

df: DataFrame containing the page URLs and their embeddings.

outlinks_df: DataFrame with the site's internal links (Source-Destination pairs).

min_relevance: Minimum cosine similarity score for considering related pages (default = 0.7).

top_n: Number of top related pages to return for each URL (default = 10).

all_link_opportunities: An empty list to store the link opportunities across all URLs.

Ensure URLs are in List Format

if isinstance(urls, str):

urls = [urls]What This Does:

This part ensures that the URLs input is always treated as a list, even if only a single URL is provided.

Key Parts:

if isinstance(urls, str): Checks whether the input urls is a string (i.e., a single URL).

urls = [urls]: Converts the single URL string into a list, ensuring consistency for the upcoming loop.

Loop Through Each URL

for url in urls:

link_opportunities = {}What This Does:

This block loops through each URL in the list to find internal linking opportunities. It creates an empty dictionary for each URL to store the relevant link opportunities.

Key Parts:

for url in urls: Loops through each URL in the list of URLs.

link_opportunities = {}: Initializes a dictionary to store link opportunities for the current URL.

Get Related Pages Using Cosine Similarity

related_pages = find_related_pages(df, top_n=top_n, threshold=min_relevance).get(url, [])What This Does:

For each URL, the script finds the top N related pages using cosine similarity, ensuring only those pages that meet the relevance threshold are considered.

Key Parts:

find_related_pages(df, top_n=top_n, threshold=min_relevance): Calls the function to calculate cosine similarity and find the most relevant pages based on their embeddings.

df: DataFrame containing the page URLs and embeddings.

top_n: Returns the top N most relevant pages (default = 10).

threshold=min_relevance: Only returns pages with a similarity score above the threshold (0.7).

.get(url, []): Retrieves the related pages for the current URL or returns an empty list if none are found.

Check for Related Pages

if not related_pages:

print(f"No related pages found for {url}")

continueWhat This Does:

If no related pages are found for a given URL, the script prints a message and moves on to the next URL in the list.

Key Parts:

if not related_pages: Checks if no related pages were found for the current URL.

print(f"No related pages found for {url}"): Outputs a message indicating no related pages were found.

continue: Skips the rest of the loop for the current URL and moves to the next URL.

Check for Existing Links and Relevance Score

for related_url, score in related_pages:

outlinks_for_page = outlinks_df[outlinks_df['Source'] == related_url]['Destination'].values

if url not in outlinks_for_page:

if score >= min_relevance:

link_opportunities[related_url] = scoreWhat This Does:

For each related URL, the script checks whether a link already exists from the related URL to the current URL. If no link exists and the relevance score meets the threshold, the related URL is stored as a link opportunity.

Key Parts:

for related_url, score in related_pages: Iterates through each related page and its similarity score.

outlinks_for_page = outlinks_df[outlinks_df['Source'] == related_url]['Destination'].values: Retrieves the list of destination pages that the related URL links to.

if url not in outlinks_for_page: Checks if the current URL is not already linked from the related URL.

if score >= min_relevance: Ensures that only highly relevant pages (above the 0.7 threshold) are considered.

link_opportunities[related_url] = score: Adds the related URL and its relevance score to the dictionary of opportunities.

Create a DataFrame for Link Opportunities

if link_opportunities:

opportunities_df = pd.DataFrame(list(link_opportunities.items()), columns=['Related URL', 'Relevance Score'])

opportunities_df['URL'] = url

opportunities_df = opportunities_df[['URL', 'Related URL', 'Relevance Score']]

opportunities_df = opportunities_df.sort_values(by='Relevance Score', ascending=False)

all_link_opportunities.append(opportunities_df)What This Does:

For each URL, if any valid link opportunities are found, they are converted into a DataFrame. The DataFrame is sorted by relevance score and then appended to the list of all link opportunities.

Key Parts:

list(link_opportunities.items()): Converts the dictionary of link opportunities into a list of tuples.

columns=['Related URL', 'Relevance Score']: Defines the column names of the DataFrame.

opportunities_df['URL'] = url: Adds a column indicating the current URL that the opportunities are for.

sort_values(by='Relevance Score', ascending=False): Sorts the DataFrame by relevance score in descending order.

all_link_opportunities.append(opportunities_df): Appends the DataFrame of link opportunities for the current URL to the list of all link opportunities.

Combine Results and Return

if all_link_opportunities:

final_opportunities_df = pd.concat(all_link_opportunities, ignore_index=True)

return final_opportunities_df

else:

return pd.DataFrame()What This Does:

After all URLs have been processed, this block combines the link opportunities for each URL into a single DataFrame and returns the result. If no opportunities are found, it returns an empty DataFrame.

Key Parts:

pd.concat(all_link_opportunities, ignore_index=True): Combines the DataFrames for all URLs into a single DataFrame.

ignore_index=True: Ensures the index is reset for the combined DataFrame.

return final_opportunities_df: Returns the combined DataFrame of all link suggestions.

return pd.DataFrame(): If no link opportunities were found, returns an empty DataFrame.

Ways to add URLs to run through the script

Method 1: Manually Provide a List of URLs

urls = ['https://www.saveourscruff.org/available-dogs', 'https://www.saveourscruff.org/ways-to-get-involved']

best_link_opportunities_df = find_best_link_opportunities(urls, df, outlinks_df, min_relevance=0.7, top_n=10)

best_link_opportunities_dfWhat This Does:

In this method, you manually provide a list of URLs that you want to find internal linking opportunities for. This is useful when you’re working with a small set of pages and don’t need to upload a file.

Key Steps:

urls: A list of URLs is created by manually typing them into the script.

find_best_link_opportunities: The function takes this list and looks for internal link opportunities for each page.

best_link_opportunities_df: The results are stored in a DataFrame showing the best opportunities for internal linking.

Method 2: Upload a CSV File of URLs

print("Please upload the file containing the list of URLs")

uploaded = files.upload()

pages_df = pd.read_csv(list(uploaded.keys())[0])

urls = pages_df['URL'].tolist()

best_link_opportunities_df = find_best_link_opportunities(urls, df, outlinks_df, min_relevance=0.7, top_n=10)

best_link_opportunities_dfWhat This Does:

In this method, you upload a CSV file containing a list of URLs. This is useful when you’re working with a large number of pages, allowing you to bulk-process them without manually typing in each one.

Key Steps:

Upload CSV: The script prompts you to upload a CSV file containing the URLs you want to analyze.

pages_df['URL'].tolist(): The script reads the CSV file and extracts the URLs into a list.

find_best_link_opportunities: The function takes this list of URLs and finds internal linking opportunities for each page.

best_link_opportunities_df: The results are stored in a DataFrame, similar to the manual method.

Both methods achieve the same result—the difference is whether you provide the URLs manually or upload them in bulk using a CSV file. This gives you flexibility depending on the size of the project you're working on.

Thank you - this is helpful and will speed up the process for large sites. Very much appreciated!